Google has announced DolphinGemma, an AI model designed to learn the structure of dolphin vocalizations and generate dolphin-like sound sequences. The announcement, shared in an official Google blog post, marks a step forward in the long-running effort toward interspecies communication.

Unveiled fittingly on National Dolphin Day, DolphinGemma reflects the intersection of cutting-edge AI technology and decades of field research. Developed in collaboration with Georgia Tech and the Wild Dolphin Project (WDP), the AI model represents a pioneering attempt to understand dolphin communication patterns deeply enough to predict, and even generate, realistic dolphin-like sounds.

An AI Model Inspired by Natural Conversations

Understanding dolphins has always been a scientific frontier. Their rich acoustic world — composed of signature whistles, burst-pulses, and echolocation clicks — has long suggested a sophisticated form of social communication, but decoding it has remained a daunting task.

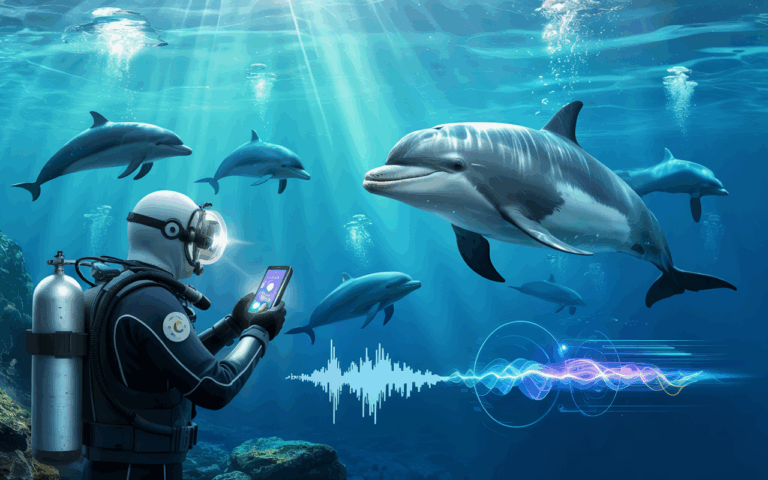

Now, Google’s DolphinGemma enters the scene. It builds on technology from Gemma, the company’s family of lightweight open models, which shares its research foundations with the Gemini models. DolphinGemma uses Google’s SoundStream tokenizer to convert the intricate audio patterns of dolphin sounds into discrete tokens the model can process. With around 400 million parameters, the model is compact enough to run directly on the smartphones researchers carry in the field, namely Google Pixel devices.
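The claim that a model of roughly 400 million parameters fits on a phone can be checked with back-of-envelope arithmetic. The sketch below estimates weight storage only; the numeric precisions are assumptions for illustration, since the article does not say which one DolphinGemma uses, and activations and runtime overhead are ignored.

```python
# Rough memory footprint of model weights at different numeric precisions.
# The parameter count comes from the article; the precisions are assumptions.

PARAMS = 400_000_000  # "around 400 million parameters"

# Bytes needed to store one parameter at each (assumed) precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_gb(params: int, bytes_per_param: int) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, size in BYTES_PER_PARAM.items():
        print(f"{name}: {weight_footprint_gb(PARAMS, size):.1f} GB")
```

Under these assumptions the weights take about 1.6 GB at 32-bit precision, 0.8 GB at 16-bit, and 0.4 GB at 8-bit, all within reach of a current smartphone's memory.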

DolphinGemma works by analyzing sequences of real dolphin sounds, identifying patterns, and predicting the next likely sounds, much like how AI language models predict words in human conversation. This predictive ability can help researchers uncover hidden structures within dolphin communications that might otherwise remain invisible to human analysis.
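The idea of predicting the next sound from the sounds so far can be illustrated with a toy example. The sketch below is not DolphinGemma's method: it swaps the neural model for a simple bigram frequency table, and the integer token IDs are hypothetical stand-ins for what an audio tokenizer might emit. It only shows the shape of the task, namely learning from token sequences and then predicting or generating a continuation.

```python
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count how often each token is immediately followed by each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, length):
    """Greedily extend a sequence from `start` up to `length` tokens."""
    out = [start]
    while len(out) < length:
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


# Hypothetical token IDs standing in for tokenized dolphin audio.
recordings = [
    [3, 7, 7, 2, 9],
    [3, 7, 2, 9, 9],
    [5, 3, 7, 2],
]
model = train_bigram(recordings)

if __name__ == "__main__":
    print(predict_next(model, 7))   # most common token after 7
    print(generate(model, 3, 5))    # greedy continuation starting at 3
```

A bigram table looks only one token back. A language-model-style approach conditions on a much longer history, which is what would let it pick up longer-range structure in a recording.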

Powered by Decades of Field Data

The foundation for this work is decades of research by the Wild Dolphin Project. Since 1985, WDP has compiled an extensive database of underwater audio and video recordings, each tied to individual dolphin identities and observed behaviors. Because specific sounds are linked to social contexts, such as a mother calling her calf, a squabble, or courtship, the archive gives an AI model labeled examples to learn from.

Rather than trying to replace human researchers, DolphinGemma augments their work. The model’s ability to sift through vast acoustic datasets in search of recurring patterns allows scientists to focus on deeper interpretation and new hypotheses about dolphin society and intelligence.

Pixel Phones Turned Underwater AI Labs

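Alongside DolphinGemma, researchers have also been refining the CHAT (Cetacean Hearing Augmentation Telemetry) system, an underwater computer intended to support two-way interaction between dolphins and humans. Rather than deciphering natural dolphin communication directly, CHAT aims to build a simpler shared vocabulary around synthetic whistles.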

Earlier versions of the system ran on Pixel 6 devices. The next iteration will be built around Google’s Pixel 9, using its on-device deep learning capabilities to analyze dolphin sounds in real time, detect mimics of synthetic whistles, and respond appropriately, all while submerged in the open ocean.
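As a rough illustration of what detecting a mimic of a synthetic whistle could involve, the sketch below compares a heard whistle's frequency contour against stored templates. It is a simplified stand-in, not the CHAT implementation: the template names, the contour values in kHz, the 0.9 threshold, and the use of Pearson correlation are all assumptions made for this example.

```python
import math


def resample(contour, n=32):
    """Linearly interpolate a frequency contour to exactly n points."""
    if len(contour) == 1:
        return [float(contour[0])] * n
    out = []
    for i in range(n):
        pos = i * (len(contour) - 1) / (n - 1)
        lo = int(math.floor(pos))
        hi = min(lo + 1, len(contour) - 1)
        frac = pos - lo
        out.append(contour[lo] * (1 - frac) + contour[hi] * frac)
    return out


def similarity(a, b, n=32):
    """Pearson correlation between two resampled contours, in [-1, 1]."""
    x, y = resample(a, n), resample(b, n)
    mx, my = sum(x) / n, sum(y) / n
    dx = [v - mx for v in x]
    dy = [v - my for v in y]
    denom = math.sqrt(sum(v * v for v in dx) * sum(v * v for v in dy))
    if denom == 0:
        return 0.0  # a flat contour has no shape to compare
    return sum(p * q for p, q in zip(dx, dy)) / denom


def best_match(contour, templates, threshold=0.9):
    """Return (template_name, score), with name None if below threshold."""
    scores = {name: similarity(contour, t) for name, t in templates.items()}
    name = max(scores, key=scores.get)
    score = scores[name]
    return (name, score) if score >= threshold else (None, score)


# Hypothetical synthetic-whistle templates (frequency in kHz over time).
templates = {
    "whistle_a": [8, 9, 10, 11, 12],   # rising sweep
    "whistle_b": [12, 11, 10, 9, 8],   # falling sweep
}

# Hypothetical contour extracted from a heard whistle.
heard = [8.1, 8.9, 10.2, 11.0, 11.8, 12.1]

if __name__ == "__main__":
    print(best_match(heard, templates))
```

Correlation compares contour shape only, so a mimic shifted up or down in pitch would still match. Whether that is desirable depends on how faithfully dolphins reproduce the synthetic whistles, which this sketch does not attempt to model.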

The integration of mobile AI drastically cuts down on size, power needs, and costs — an essential advantage for research in unpredictable marine environments. And by combining DolphinGemma’s predictive analysis with real-time interaction tools, scientists hope to make interactions with dolphins more natural, immediate, and meaningful.

Looking Ahead: Open Sourcing DolphinGemma

In an exciting move for the scientific community, Google also announced plans to open source DolphinGemma this summer. While initially trained on Atlantic spotted dolphin data, the model’s framework could be adapted for studies of other cetacean species, such as bottlenose or spinner dolphins, with additional fine-tuning.

The open model approach signals Google’s commitment to fostering global collaboration in AI-driven science, giving researchers powerful new tools to accelerate the search for meaning within the oceans’ most fascinating conversations.

“We’re not just listening anymore. We’re beginning to understand the patterns,” the blog post notes — capturing the spirit of a breakthrough that may one day allow humans and dolphins to truly “talk.”

As DolphinGemma embarks on its first real-world field season, humanity stands on the brink of a new era in understanding the intelligence of our fellow inhabitants of the sea — not through imagination alone, but through the transformative potential of AI.